Welcome to the final installment in this series on real-time data infrastructure. In the last piece, we explored the architectural tradeoffs that emerge when trying to make lakehouses real-time, looking at the limitations of batch-first systems, the rise of open table formats, and emerging hybrid approaches like Streamhouse and Streaming-Augmented Lakehouse (SAL). While these innovations bring streaming and batch closer together, they still fall short of delivering a fully streaming-native experience.

Revisiting Kappa: The Ultimate Data Streaming Platform

In the previous piece, I referenced an article written in 2015 by Tyler Akidau. In it he argued that, “a well-designed streaming engine is a strict superset of a batch engine, capable of handling both bounded and unbounded datasets in a unified way.” He posited that there are two key requirements for robust streaming:

- Correctness: consistent stateful processing → gets you parity with batch

- Tools for reasoning about time: mechanisms for managing event time and handling late or out-of-order data → gets you beyond batch

Here I want to build on those concepts and flesh out an updated list of requirements for the modern data streaming platform (DSP):

Exactly‑once Processing (End to End)

Exactly-once processing ensures that each event in a streaming pipeline is processed one time only, even in the face of failures or retries. It's critical for maintaining correctness and consistency, however, it’s difficult to achieve because it requires careful coordination across ingestion, processing, and storage layers. To be effective, the entire pipeline must support durable state tracking, transactional sinks, and robust recovery logic - all while maintaining performance at scale. Today, modular components of the stack can do exactly-once processing (ie. Flink), but full end-to-end has not been achieved.

Streaming Materialized Views

A modern DSP must be able to maintain live, continuously updated query results (joins, aggregations, and windowed computations) without needing to reprocess entire datasets. This requires native support for incremental updates using stateful operators that track and update results as new data arrives. Achieving this reliably also means managing changelogs, replays, and late data correctly. Without this capability, systems fall back on batch-style recomputation, which increases latency and resource usage. Modern processors (like Materialize and RisingWave) support streaming materialized views today, but streaming + lakehouse setups still rely on expensive recomputes or scheduled batch jobs.

Unification of Batch + Stream Via Reprocessing

A key promise of streaming is that it can subsume batch by treating all data, past and present, as events on a timeline. To achieve this, a DSP must let teams replay historical data through the same real-time pipeline, enabling consistent logic across both live and backfill workloads. This requires a durable event log, deterministic operators, and the ability to reason about time (e.g. watermarks and event-time windows). In practice, this vision is still aspirational: many systems maintain separate batch and stream paths or require duplicate logic, though progress is being made through platforms like Flink, Dataflow, and hybrid engines.

Streaming-Native Storage

To be streaming-first, a system must support record-level inserts, updates, and deletes, not just periodic file dumps or append-only writes. At the same time, it needs to serve fast queries without degrading under heavy write volume. This balancing act is hard: most lakehouse storage formats (e.g. Iceberg, Delta) are optimized for large file-based operations and require compaction or snapshotting to stay performant. Truly streaming-native storage must allow small, continuous writes while preserving query performance and minimizing maintenance overhead. Today, systems like Apache Fluss, Apache Paimon and LogStore-based engines are experimenting in this space.

Lambda Persists in Machine Learning

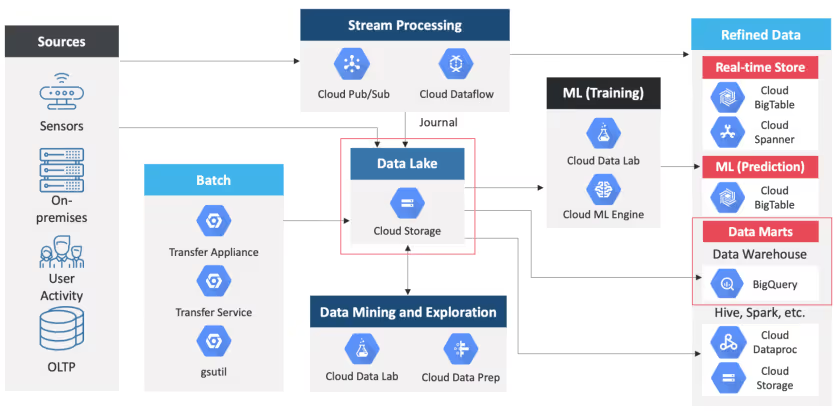

It is clear that we have come much closer to a pure data streaming platform as systems have evolved, but Lambda-style architecture still reigns supreme in machine learning. Building real-time ML pipelines is significantly more complex since it requires a streaming system for on-the-fly feature updates, and a batch system for historical feature generation and model training. A major reason for this is the role of feature stores, which are systems that transform raw data into model-ready inputs and store those features so they can be consistently used during both training and real-time prediction. Most feature stores today are still designed primarily around batch processing, since training workflows don’t typically require ultra-low latency and batch jobs remain more cost-efficient for large-scale historical computation. To manage the coordination between batch and streaming pipelines, many organizations have adopted the data lakehouse system discussed in the previous installment.

Typical Data Architecture in Google Cloud Platform (Source)

The key challenge in this architecture is ensuring consistency between training and serving. The features used during inference must match the historical state of data at training time - a characteristic known as point-in-time correctness. Without this, model training and serving can diverge, leading to degraded performance or unpredictable behavior. Modern infra tools have taken large strides to build robust feature stores and abstract the complexities of dual pipeline management. The following are two examples I mentioned in my first installment:

Tecton

Tecton is widely regarded as the incumbent in the feature store category. Emerging from the engineering team behind Uber’s Michelangelo platform, Tecton productized many best practices for managing ML features at scale like consistent feature definitions, historical backfills, and online/offline parity. It integrates with both batch and streaming data sources and automates the materialization of features for training and serving. Their core value lies in simplifying MLOps workflows for large teams by abstracting the orchestration of Spark, Kafka, and other backend tools. However, its architecture is still fundamentally Lambda-style, and that brings both complexity and cost. Its use of external engines makes performance tuning and debugging harder, and while Tecton has established itself in the enterprise, its pace of innovation appears to have stalled. For a category-defining product, it hasn't fully moved beyond being a managed feature store, which limits its potential in a market that increasingly demands more integrated and streaming-native solutions.

Chalk

Chalk, by contrast, represents a newer generation of infrastructure built for real-time ML from the ground up. Rather than just managing batch pipelines with streaming add-ons, Chalk treats feature engineering as a live, continuously updated process - offering richer support for streaming joins, versioned features, and point-in-time accuracy by default. The product experience is opinionated, developer-first, and feels tailored for modern, low-latency ML use cases. That said, Chalk faces a classic Goldilocks challenge. For smaller ML teams, it risks being too sophisticated or heavy for their needs. For larger, highly mature teams, there's always the possibility they’ll prefer to build and manage infrastructure in-house to optimize for performance, cost, or compliance. To succeed across the spectrum, Chalk will need to go beyond being “just a feature store” and prove that it can deliver end-to-end value, offering the flexibility, integration, and operational leverage required to compete in a category that’s increasingly moving toward unified, vertically integrated platforms.

A Stream-Native Future

We are currently in a phase of optimizing the dual-pipeline architecture, and I still believe there is immense value to be generated at this stage. Companies like Chalk are paving the way for a stream-native future and will be integral in helping legacy systems make the transition. However, I also want to share some exciting, early developments that may lay the foundation for a pure streaming platform.

Cold Start Streaming Learning

In November 2022, Cameron Wolfe and Anastasios Kyrillidis published a paper proposing a streaming-only ML method called CSSL (Cold Start Streaming Learning). CSSL enables deep neural networks to begin training from random initialization and update continuously, one example at a time, as new data arrives. Unlike most existing approaches that require offline pre-training and freeze much of the model during streaming, CSSL trains the entire network end-to-end using a replay buffer and a simple data augmentation pipeline. Despite its simplicity, CSSL outperforms several existing baselines across benchmarks like CIFAR100 and ImageNet and even performs well in multi-task settings, where different datasets are streamed sequentially.

This research provides a compelling proof-of-concept for the potential of streaming-only ML systems - where models can adapt in real time, from scratch, without relying on separate batch pipelines or costly retraining cycles. Of course, CSSL isn’t without limitations. It still depends on ample memory for replay buffers, which may not be viable for edge deployments, and it’s evaluated primarily in image classification tasks, which may not generalize to all domains (e.g., NLP, large-scale retrieval, or reinforcement learning). Still, the core idea that deep learning can be trained incrementally in a streaming fashion without sacrificing performance is a powerful one. As streaming data platforms evolve and real-time AI becomes the norm, work like CSSL lays the theoretical and practical groundwork for ML systems that are always on, always learning, and no longer tethered to batch.

Confluent’s Snapshot Queries

In May 2025, Confluent unveiled Snapshot Queries, a new capability that allows users to perform point-in-time analytical queries directly over Kafka streams without first offloading data to a separate batch system or data warehouse. Early access users can now write SQL queries against live and historical topics with strong time semantics, enabling debugging, feature validation, and ad hoc analysis - all from within the streaming ecosystem.

This feature is part of a much broader bet by CEO Jay Kreps (also developer of the Kappa Architecture): that the future of data infrastructure is streaming-native, and that streaming systems will eventually subsume the role of batch systems entirely. In his view, traditional lakehouses and warehouses are architectural relics of the batch era, and their reliance on slow, periodic data movement is incompatible with the demands of real-time AI systems and autonomous agents. Confluent’s long-term vision is to evolve Kafka into a streaming data platform that is not only a conduit for events, but also a system of record that supports real-time analytics, ML feature pipelines, and application logic all in one place.

While Snapshot Queries are still early in adoption and not yet a full replacement for lakehouse-scale compute, they represent an exciting move toward reducing pipeline fragmentation. If querying historical Kafka topics becomes fast, scalable, and expressive enough, it could dramatically simplify how teams build and debug ML and AI systems. As with CSSL, this signals a shift in mindset: instead of fitting streaming into a batch-first world, people are starting to ask what it would take to make streaming the world.

Our Thesis

Here are a few core theses that have emerged throughout the course of this research on how we believe real-time data platforms will evolve and compete over the next decade.

- Shift-left is coming, but there will be no singular architecture that fits all needs

The shift-left movement of bringing data processing, validation, and intelligence closer to the moment data is generated is well underway. As AI agents and real-time applications gain traction, organizations are increasingly prioritizing low-latency data pipelines and in-stream decision-making. However, despite this directional push, there will not be a one-size-fits-all architecture. Fraud detection, for example, may demand sub-second stream processing, while real-time personalization might combine streaming detection with batch-based enrichment. The architectural spectrum will remain diverse, and winning platforms will be those that can abstract complexity while remaining flexible enough to support hybrid use cases. Uniformity isn’t the goal, composability and adaptability are.

- Batch/streaming will eventually converge on price, so differentiation must come from UX

Historically, streaming has been more expensive and operationally complex than batch processing, which is why many teams defaulted to batch pipelines even when latency wasn’t ideal. But as cloud-native engines, serverless execution, and streaming-first formats mature, the cost differential between batch and stream is closing. In the future, choosing between batch and streaming won’t be driven by infrastructure cost, it will be driven by developer experience, observability, and ease of integration. Platforms that win will offer intuitive tooling, versionable pipelines, and real-time feedback loops that empower both data engineers and less technical users. The most powerful engine won’t matter if it’s hard to use, therefore UX will be the new differentiator.

- Modularity will beat out end-to-end platforms at scale

While end-to-end ML and data platforms promise simplicity, they often come with trade-offs in flexibility, extensibility, and cost control, especially for companies with mature data teams. As organizations scale, their needs become more nuanced: integrating specialized models, managing compliance, optimizing for latency or storage, and adapting to fast-changing AI workflows. Monolithic platforms struggle to keep pace. Modularity, composable infrastructure built around open interfaces, offers the long-term advantage. It enables teams to swap out parts of the stack without rewriting everything, experiment more freely, and tune for performance or cost in ways that closed platforms can’t match. Just as microservices replaced monolithic applications, modular data in ML architectures are poised to win at scale.

The age of real-time has already begun

Across this three-part series, we’ve traced the evolution of real-time data infrastructure from the early promises of streaming, through the rise of modern lakehouses, to today’s emerging vision of streaming-native platforms. In Part 1, we explored how the batch-to-streaming shift began, fueled by changing user expectations and the need for sub-second responsiveness. In Part 2, we looked at how the lakehouse emerged as a practical response to fragmented data systems, and how new architectural patterns like Streamhouse and SAL are bridging the divide between batch and stream. In this final installment, we explored what it would take to move beyond those bridges entirely: toward systems that are built from the ground up for real-time data, real-time ML, and real-time AI.

It’s clear we’re not there yet. Even the most advanced tooling today operates within some form of dual-pipeline complexity. Lambda-style architectures still dominate in machine learning, and streaming-only model training remains an early research frontier. But it’s also clear that momentum is building. From CSSL to Confluent’s Snapshot Queries, and from Chalk’s streaming-native feature engine to the maturing capabilities of platforms like Flink and Kafka, we are watching the foundations of a stream-native future being laid in real time. The shift is not about abandoning batch overnight, but about rethinking what becomes possible when streaming is the default, not the afterthought.

As we enter this next era, the real opportunity isn’t just technical, it’s strategic. Companies that embrace this shift early will be able to move faster, build smarter systems, and deliver richer real-time experiences. The platforms that succeed will not only meet technical requirements like exactly-once guarantees or streaming materialized views, they’ll also simplify the developer experience, support modular growth, and empower teams to act at the speed of data.

The age of real-time has already begun and the question now is: who’s ready to build for it?

If you’re building data infrastructure to support the impending explosion of real-time data use cases and are looking to raise your Series A or Series B, reach out to elevate@antler.co.

Or if you’re just getting started, Antler runs residencies across 27 cities globally. You can apply to the relevant location here.

.webp)

.avif)

%20(1)%20(1)%20(1)%20(1)%20(1)%20(1).avif)

.avif)