This article, co-authored with Chris Millisits and Laura Zhang, is the second in a three-part series on the future of real-time data infrastructure. In the last piece, we discussed the history of batch to stream processing, how the streaming data stack works, and the general landscape of infra tools. We also looked at some exciting new players aiming to simplify the complexities of building with older, open source software (i.e. Kafka, Flink).

Early Architectures: Lambda vs. Kappa

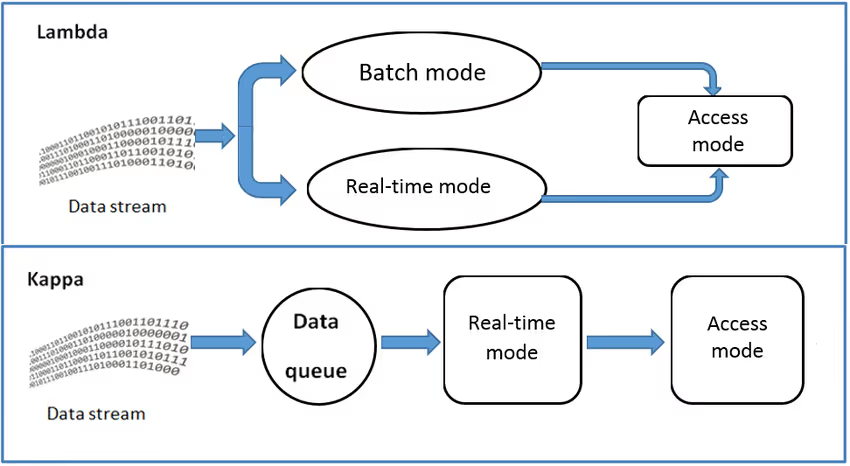

While many applications are entirely built on the modern stream processing stack today, early streaming technology still lacked the maturity, state management, and fault tolerance needed to replace batch processing entirely. Organizations wanted both accurate historical analysis and immediate insights, but there was no single system capable of doing both well. Therefore, the Lambda architecture was proposed by Nathan Marz around 2011 while he was working on large-scale data systems at Twitter. It was designed to handle both massive historical datasets and continuously arriving real-time events by splitting processing into two distinct pipelines:

- A batch pipeline that processes all available data periodically

- A streaming pipeline that processes only the most recent events in real time

- A serving layer that merges the outputs to give users a single, combined view

Although powerful for its time, Lambda came with significant operational challenges. Because it required two separate codebases and processing pipelines - one for batch and one for streaming - teams had to duplicate transformation logic in both layers, often in different frameworks. This “dual maintenance” created engineering overhead, increased the risk of inconsistencies between batch and speed results, and made debugging more complex. Additionally, the speed layer typically had to be designed for approximation and eventual reconciliation, which meant real-time outputs could differ from the later batch-corrected view.

As stream processing systems matured - offering exactly-once guarantees, persistent state, and the ability to replay historical data - many organizations moved toward the Kappa architecture. Coined by Jay Kreps (co-creator of Kafka, current CEO of Confluent) in 2014, Kappa eliminated the batch layer entirely. Instead, all data is treated as a stream, and the same pipeline is used both for real-time processing and for reprocessing historical data by replaying stored events from a durable log like Kafka. This reduced code duplication, simplified operations, and made it easier to evolve logic over time without managing two parallel systems.

The Kappa architecture laid the foundation for the “shift-left” principle. This concept borrowed from software engineering means pushing tasks earlier in the workflow so issues can be acted on sooner. In the streaming context, this translates to running analytics, transformations, and validations as data arrives, rather than waiting for downstream batch jobs. Proponents of this view argue that a strong streaming system can entirely replace batch processing, handling both real-time and historical workloads through event replay. (Tyler Akidau wrote a highly prescient piece in 2015 on what it will take for stream to completely supersede batch.)

Lambda vs. Kappa Architecture (Source: ResearchGate)

However, not everyone has embraced this full transition. Many organizations still find value in a hybrid approach, using elements of both Lambda and Kappa architectures - streaming for low-latency use cases and batch for heavy backfills, complex historical aggregations, or cost-efficient offline analytics. Notably, Lambda remains the most practical choice for ML pipelines today, since model training still relies heavily on large-scale batch processing.

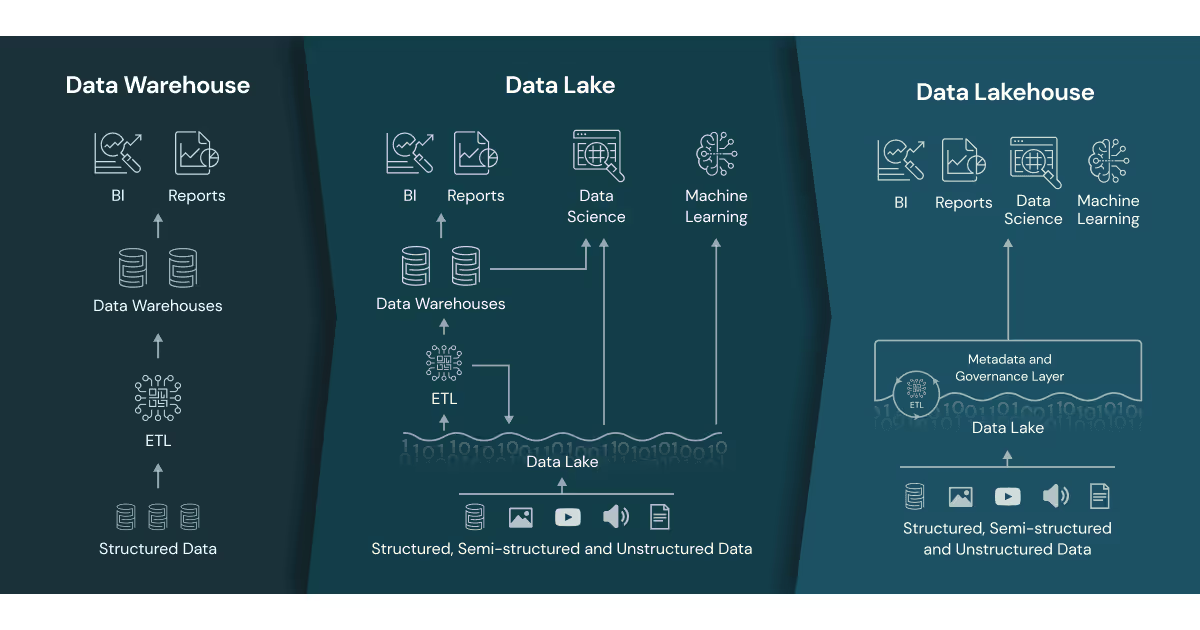

The Lakehouse Era

Introduced around 2017, the lakehouse model has transformed how organizations manage data, bridging the gap between the flexibility of data lakes and the structured query power of data warehouses. Traditionally, data lakes were optimized for storing massive amounts of raw, unstructured or semi-structured data (e.g., logs, images, JSON), while data warehouses were tuned for structured, relational datasets used in analytics and business intelligence (BI). This separation meant teams often had to maintain two different systems, duplicate data, and build complex ETL pipelines to move information between them. The lakehouse model eliminates that divide by allowing structured, semi-structured, and unstructured data to coexist in a single storage layer, enabling analytics, reporting, and machine learning on all data without shuffling it across systems.

A key enabler of the lakehouse era has been the emergence of open table formats like Apache Iceberg, which bring critical database-like capabilities to object storage. Iceberg supports ACID transactions, schema evolution, time travel, and partition management, ensuring that datasets remain consistent and queryable even as multiple engines and pipelines interact with them. This openness allows different compute frameworks (such as Apache Flink, Trino, Spark, or Dremio) to access the same data concurrently without risk of corruption or stale reads. For organizations working with both batch and streaming data, Iceberg can serve as the unifying layer that ensures interoperability, governance, and performance at scale.

The Data Lakehouse (Source: Databricks)

Builders and investors alike are incredibly excited about the future of lakehouse architectures. Earlier this year, the team at Bessemer put together a great piece on the latest lakehouse trends. Key insights related to streaming and real-time processing below:

- “As AI-driven data workflows increase in scale and become more complex, modern data stack tools such as drag-and-drop ETL solutions are too brittle, expensive, and inefficient, for dealing with the higher volume and scale of pipeline and orchestration approaches”

- “While batch engines have dominated previous architectural paradigms and will continue to play an important role in the ecosystem, we also anticipate that data processing will progressively “shift left” to be closer to the time of action.”

- “As organizations mature their AI capabilities, we'll see the emergence of specialized stream processing patterns optimized for model serving and continuous learning pipelines that blend historical batch data with real-time signals for more accurate predictions and recommendations.”

The first point speaks directly to the simplicity vs. customizability tradeoff I introduced in my first installment of this series. Modern abstractions have undeniably increased access to streaming pipelines for smaller teams with little data engineering expertise. However, the out-of-the-box nature of these products leaves companies vulnerable to the rapidly evolving implications of AI/ML. We need a simple and flexible solution. And the notion I’d like to challenge is whether the data lakehouse is truly the best architecture to lead this charge.

Limitations of the Lakehouse

While the lakehouse model has closed the gap between data lakes and warehouses, it is still not inherently streaming-first. Open table formats were originally built with batch processing in mind and they excel at organizing and querying large, static datasets, but struggle to deliver the low-latency freshness required in high-velocity, streaming environments.

The following are key real-time limitations of lakehouses:

- Limited streaming scope: While many lakehouses can continuously ingest data from a stream (e.g., Kafka or Flink), most cannot handle the entire stream processing pipeline internally. This means they’re mainly storing incoming data rather than transforming, enriching, or joining it in real time. True end-to-end streaming would allow transformations to happen as data arrives, without relying on a separate processing system.

- High recomputation costs: In systems without native streaming materialized views (precomputed query results that update continuously), even small data changes often require re-running the entire query from scratch. For large datasets, this can cause unnecessary delays (latency) and high CPU/memory usage, because the system is repeatedly scanning and recalculating over unchanged data.

- Pipeline fragmentation: Because lakehouses aren’t optimized for in-place real-time processing, the “live” parts of a data pipeline (real-time aggregations, anomaly detection, personalization) often run in separate streaming systems. The lakehouse is then used only for storing results or running batch analytics. This leads to multi-system architectures that are harder to maintain, monitor, and troubleshoot.

- File-oriented bottlenecks: Lakehouse table formats store data in large, immutable files (e.g., Parquet). Streaming engines, on the other hand, work in continuous state (processing small batches or single records as they arrive). To store stream data in a lakehouse, it must be grouped and written as files, which introduces commit latency (waiting to finish a file before making it queryable) and compaction overhead (periodically rewriting small files into larger ones for efficiency).

- Freshness gaps for operational AI: Achieving record-level updates with second-level or sub-second latency is still difficult at lakehouse scale. This means that operational AI systems (like fraud detection, instant recommendations, or decision-making agents) may be working with data that’s already outdated. In these scenarios, even a delay of a few seconds can reduce the quality or accuracy of results.

Streamhouses and Streaming-Augmented Lakehouses (SAL)

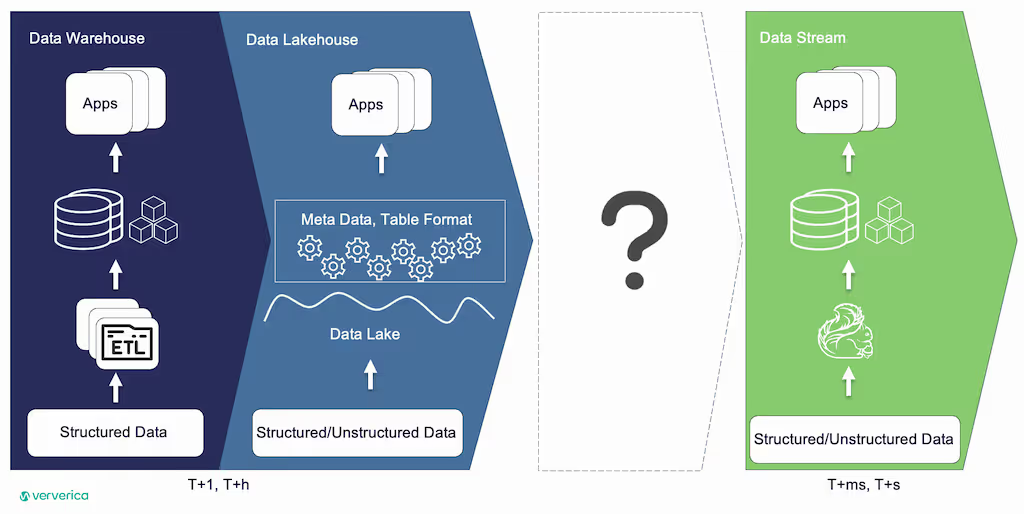

In response to these shortcomings, some companies have attempted to bridge the gap between Data Lakehouses and pure Data Streaming Platforms (ie. the Kappa Architecture) with hybrid architectures.

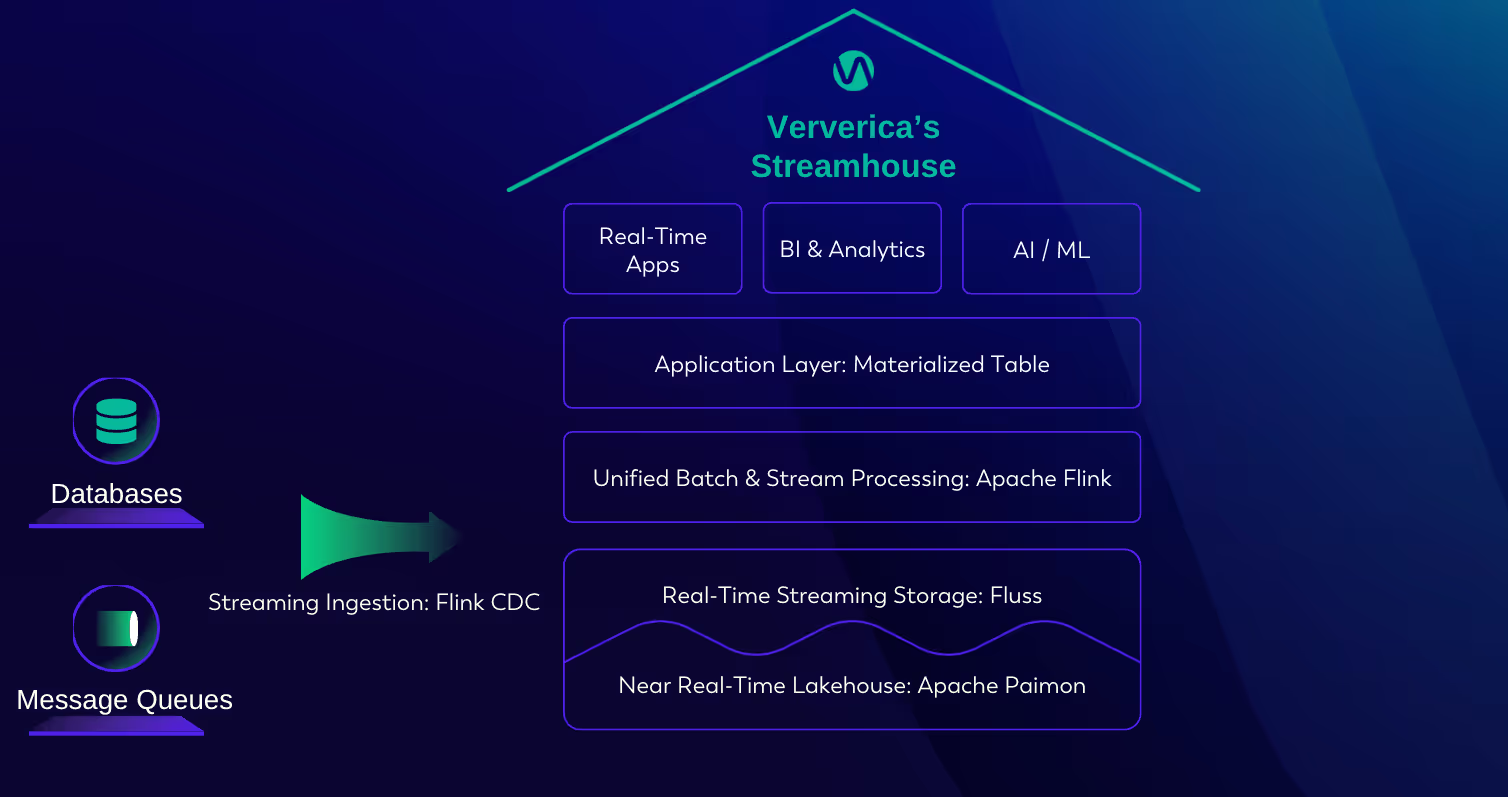

Gap Between Lakehouse and Streaming (Source: Ververica)

Streamhouse was officially introduced by the Head of Engineering at Ververica, Jing Ge, in October 2023. Streamhouse places a streaming-first layer between live stream processing and the lakehouse itself. It integrates Apache Flink, Flink CDC, and the Apache Paimon table format into a unified architecture that blends continuous pipelines with structured storage. Apache Paimon and Apache Iceberg are both open table formats, but Paimon is designed to be streaming-native with support for continuous, incremental writes and updates, while Iceberg is batch-oriented at its core, optimized for large, immutable file snapshots and periodic commits. In Streamhouse, data flows from CDC sources into Flink, which processes events in real time and writes incrementally to Paimon tables, turning the lakehouse into a live, queryable store for near-real-time analytics. The Streamhouse approach preserves the benefits of lakehouse storage (ACID, schema evolution) while ensuring the pipeline itself is streaming-native, supporting both real-time processing and historical analytics in one cohesive system.

On the other hand, some companies believe in preserving more of the core batch-orientated lakehouse architecture to maintain cost efficiency. Introduced by StreamNative CEO, Sijie Guo, in an October 2024 blog post, the Streaming‑Augmented Lakehouse (SAL) builds on the lakehouse foundation by layering real‑time streaming into it, rather than replacing it. SAL maintains the lakehouse, powered by open formats like Iceberg and Delta, as the central repository for data, while augmenting it with live data streams to deliver lower-latency updates alongside robust structure and governance. A core enabler of this system is the Ursa Engine, which ingests streaming data (e.g., from Kafka or Pulsar) directly into Iceberg tables, automatically compacting files and making new data immediately accessible to downstream analytics or AI systems like Snowflake via open catalogs. SAL achieves a balance between lakehouse-first thinking and near real-time freshness by treating streaming as a complement to, rather than a replacement for, batch workflows.

Is batch needed at all?

The evolution from Lambda to Kappa, and from warehouses to open lakehouse formats like Iceberg, reflects an industry-wide push toward fresher, more flexible data systems. In this piece, we explored how emerging architectures like the Streamhouse and Streaming-Augmented Lakehouse (SAL) are narrowing the gap between the reliability of lakehouses and the immediacy of streaming systems. These solutions offer promising steps forward: Streamhouse reimagines the pipeline as streaming-native from the ground up, while SAL enhances traditional lakehouses with faster ingestion and query readiness. Both bring real-time processing closer to the core of data infrastructure.

Yet despite these innovations, fundamental limitations remain. Lakehouses still rely on file-based storage and batch-optimized execution models, which can introduce latency, complexity, and compute overhead when used for truly real-time applications like fraud detection, GenAI agents, or continuous model evaluation. As streaming technologies mature and become simpler to operate, the question is no longer just how to bolt streaming onto batch, but whether batch is needed at all.

In the next and final installment of this series, I’ll explore what a fully streaming-first future might look like: one where real-time is not an add-on, but the default. We'll look at the platforms pushing this vision forward and the implications for everything from infrastructure design to AI-native product development.

If you’re building data infrastructure to support the impending explosion of real-time data use cases and are looking to raise your Series A or Series B, reach out to elevate@antler.co.

Or if you’re just getting started, Antler runs residencies across 27 cities globally. You can apply to the relevant location here.

.webp)

.avif)

%20(1)%20(1)%20(1)%20(1)%20(1)%20(1).avif)

.avif)