This article, co-authored with Chris Millisits and Laura Zhang, is the first in a three-part series on the future of real-time data infrastructure. In this first installment, we explore how the space has evolved and provide a foundation for understanding the challenges and opportunities ahead.

Real-time data refers to information that is created, transmitted, and processed within milliseconds or seconds of being generated. From fraud alerts in banking apps to instant driver matches in ride-sharing, real-time data already powers many of the digital experiences we rely on daily. But its role in AI is even more transformative, and just beginning. Imagine chatbots that adapt their tone mid-conversation, or product recommendations that respond to your live browsing activity.

The scale of data creation is staggering. We now generate over 400 million terabytes of data per day, with a growing share of that produced and consumed in real time. The global data ecosystem is projected to reach 181 zettabytes by 2025, driven by mobile devices, connected sensors, and AI-enabled applications. In Q2 2024 alone, over $2.7 billion was poured into data infrastructure startups, many of them focused on streaming, observability, and AI-native data platforms.

In AI systems, the difference between real-time and batch data can mean the difference between a static prediction and an adaptive, context-aware response. As more companies aim to build AI that is personalized, conversational, or situationally aware, real-time data becomes the key input that makes those capabilities possible.

History: Batch to Stream Processing

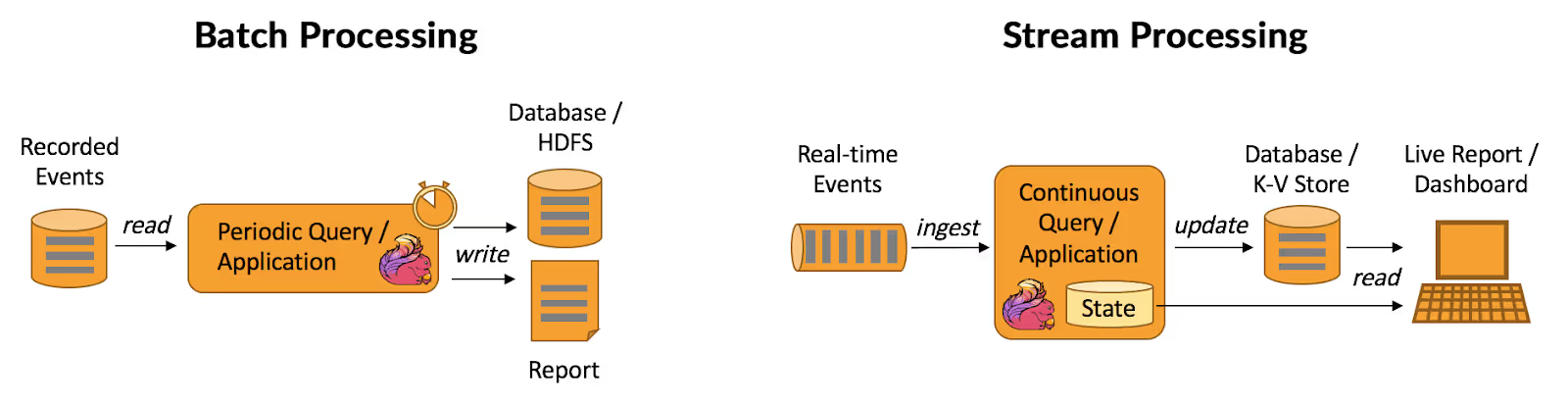

For years, batch ingestion was the default approach to working with data. Organizations would collect large volumes of information, process it at scheduled intervals (often nightly or hourly), and use the results to generate reports, power dashboards, or update models. A core component of this workflow was ETL (Extract, Transform, Load), a process that moved data from source systems into data warehouses only after cleaning and formatting it. While reliable, ETL often required teams to build and maintain complex chains of transformation scripts across multiple systems. This was sometimes called “transformation spaghetti,” since logic became scattered, duplicated, and difficult to debug or change.

By the late 2010s, the rise of cloud data warehouses such as Snowflake, BigQuery, and Redshift, combined with plummeting storage costs, enabled a new approach: ELT (Extract, Load, Transform). Pioneered by Fivetran around 2020, ELT flipped the order, loading raw data directly into the warehouse first and then applying transformations inside that centralized environment. This consolidation pushed heavy computation onto the highly scalable infrastructure of the warehouse itself, simplified pipeline maintenance, and allowed teams to re-run or adapt transformations without re-extracting source data.

However, even with ELT’s flexibility and scalability, both ETL and ELT in their traditional form were still bound to batch schedules. Data was only as fresh as the last load job, meaning anything happening between runs was invisible until the next cycle. As businesses began demanding real-time insights, these inherent latency gaps exposed the limits of batch-based systems and set the stage for the rise of true real-time data processing.

Real-time streaming began gaining traction in the early 2010s, as companies sought to power features like fraud detection, personalized recommendations, and live analytics. Unlike batch, streaming involves continuously ingesting, processing, and analyzing data as it is generated, enabling near-instant insights and responses. Early adopters were typically large tech firms or well-funded startups with deep engineering resources. Tools like Apache Kafka and Spark Streaming offered powerful capabilities but required complex infrastructure and specialized backend knowledge. As a result, real-time systems remained out of reach for most companies.

A major turning point came around 2018, when cloud providers like AWS, Google Cloud, and Azure introduced managed streaming services. These offerings drastically lowered the barrier to entry by removing the need to maintain servers or scale infrastructure manually. At the same time, new tools with simplified, SQL-like interfaces made it easier for data teams to work with streaming data. This shift opened the door for a broader range of companies, not just tech giants, to experiment with real-time use cases.

In recent years, low-code and no-code platforms such as Tinybird and Estuary have made real-time data processing even more accessible, empowering non-engineers to build and query streaming data flows. However, they still rely heavily on the underlying infrastructure (ie. data ingestion engines, stream processors, storage systems) which remains intricate and often fragile. As the volume and velocity of real-time data grow, so do the risks of inefficiencies, bottlenecks, and failures beneath the surface. While these tools simplify the developer experience by abstracting away much of the complexity, they are only as effective as the infrastructure they’re built on. This points to a critical area for continued innovation: how do we make the foundational data stack more robust and scalable?

Inside the Real-Time Data Infrastructure Stack

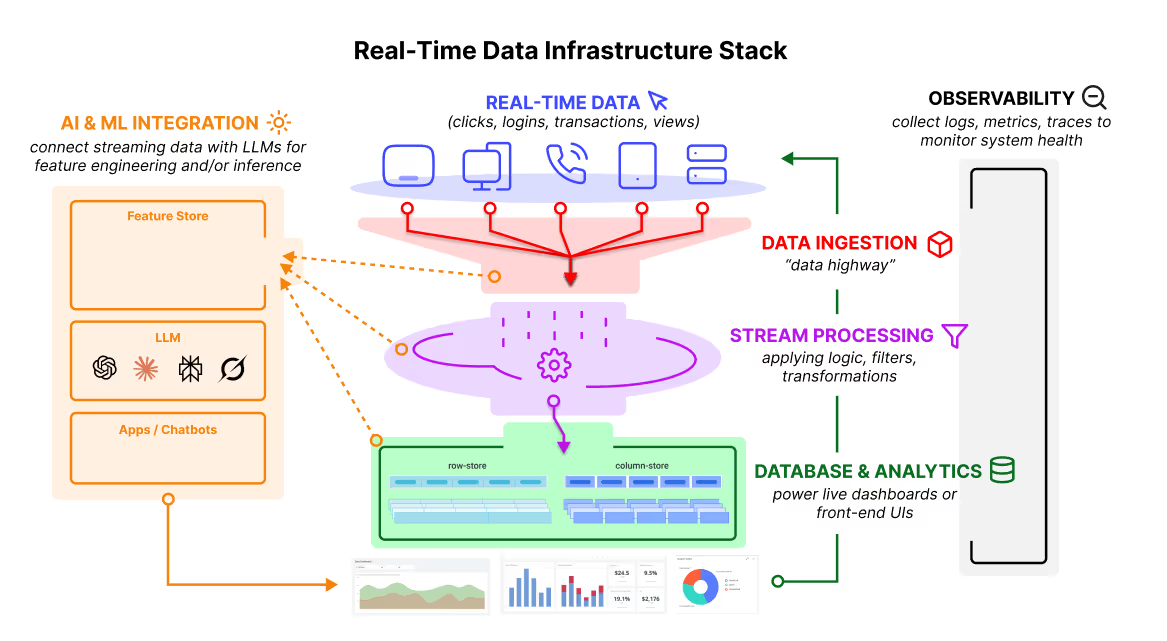

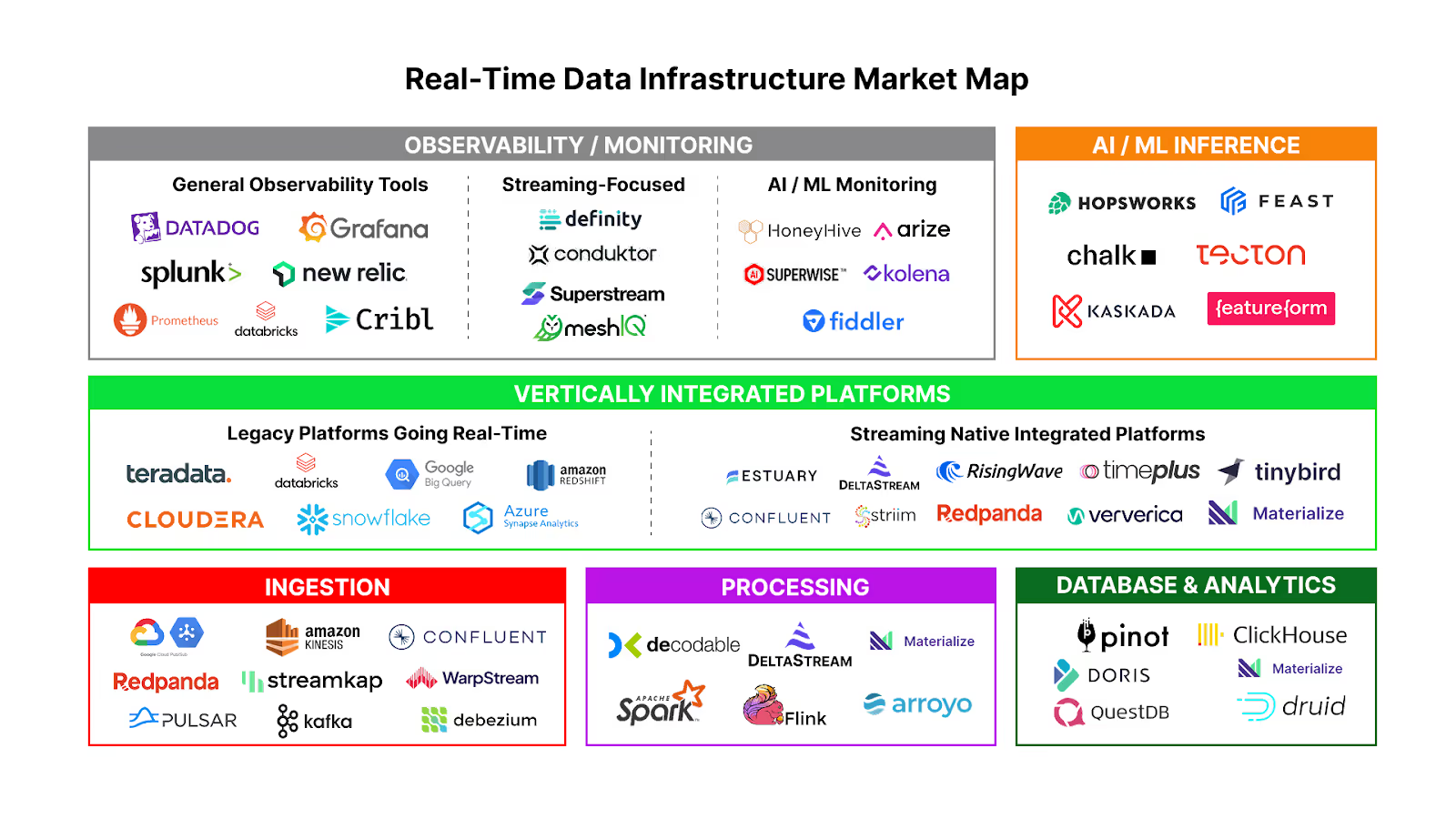

First, we must understand how the different layers of the real-time data tech stack interact with each other. The stack can be broadly divided into 5 main components: (1) Data Ingestion & Messaging, (2) Stream Processing Engines, (3) Real-Time Databases & Analytics, (4) Observability & Monitoring, and (5) AI/ML Integration.

(1) Data Ingestion & Messaging

This is where raw data enters the system: clicks, logins, transactions, sensor readings etc. Data is captured from various sources (apps, devices, services) and moved in real time through tools like Apache Kafka, Amazon Kinesis, or Google Pub/Sub. These systems act like highways that efficiently transport streaming data from where it’s generated to where it needs to go next.

(2) Stream Processing Engines

Once the data is flowing, stream processing engines (like Apache Flink or Spark Streaming) apply business logic to it on the fly. These tools filter, clean, and transform the data - for example, identifying patterns, aggregating stats, or flagging anomalies - before storing it or passing it to another system. This step turns raw inputs into actionable, structured information.

(3) Real-Time Databases & Analytics

After data is processed, it typically lands in a real-time database, which enables fast querying and access for downstream applications and/or data warehouses for long term storage. Note that some vendors use “real-time” to mean fast queries, even if the database isn’t built for continuous streaming ingestion or keeping data fresh at low latency. Therefore, we will strictly define real-time databases as having the following characteristics:

- Natively support high-throughput streaming ingestion (Kafka, Pulsar, Kinesis)

- Maintain low query latency (sub-second to a few seconds) while ingesting at scale

- Keep data fresh continuously - no big batch index rebuilds before data is queryable

- Integrate with stream processors (Flink, Spark, Materialize) or act as one itself

Real-time databases generally utilize two different storage / querying methods:

- OLTP (Online Transaction Processing) systems, often row-stores, are optimized for fast, small reads and writes - ideal for powering transactional user-facing features like search, personalization, or fraud detection

- OLAP (Online Analytical Processing) systems, often column-stores, are optimized for fast analytical queries over large volumes of data - commonly used in dashboards, metrics, and reporting

Modern real-time databases blend aspects of both OLTP and OLAP, depending on the use case. Some prioritize low-latency access for end-user applications, while others focus on delivering up-to-date analytics at scale. In either case, they serve as the layer that brings real-time data to life, powering everything from live dashboards to recommendation engines and collaborative app experiences.

(4) Observability & Monitoring

To ensure the system is healthy and reliable, observability tools collect logs, metrics, and traces across the stack. These tools (like Datadog, Grafana, or Prometheus) help teams monitor performance, detect issues, and debug failures in real time - which is especially critical when dealing with high-speed, continuous data flows.

(5) AI / ML Integration

Real-time data becomes even more powerful when connected with AI systems. Feature stores act like organized libraries of signals, such as recent logins or items in a cart, that models rely on to make accurate predictions. By keeping these signals continuously updated, they ensure models use the freshest information. With real-time inference, models apply that information instantly as new data arrives, enabling decisions in the moment: adjusting prices dynamically, recommending products as someone browses, or flagging a suspicious transaction as it happens. These AI outputs can then flow back into apps or chatbots to create responsive, intelligent experiences.

This space has definitely blown up in recent years, with growing adoption of streaming-native feature engineering and real-time feedback loops in areas like fraud detection, recommendation systems, and supply chain optimization. New tools are also emerging to support continuous model retraining, helping teams close the gap between data ingestion and action. That said, real-time AI remains an early, but fast-evolving space. Barriers to integration, especially around data orchestration, model latency, and observability, still make implementation difficult at scale. We’ll do a deeper dive into how AI / ML systems incorporate real-time streaming pipelines and the challenges associated with those workflows later in this series.

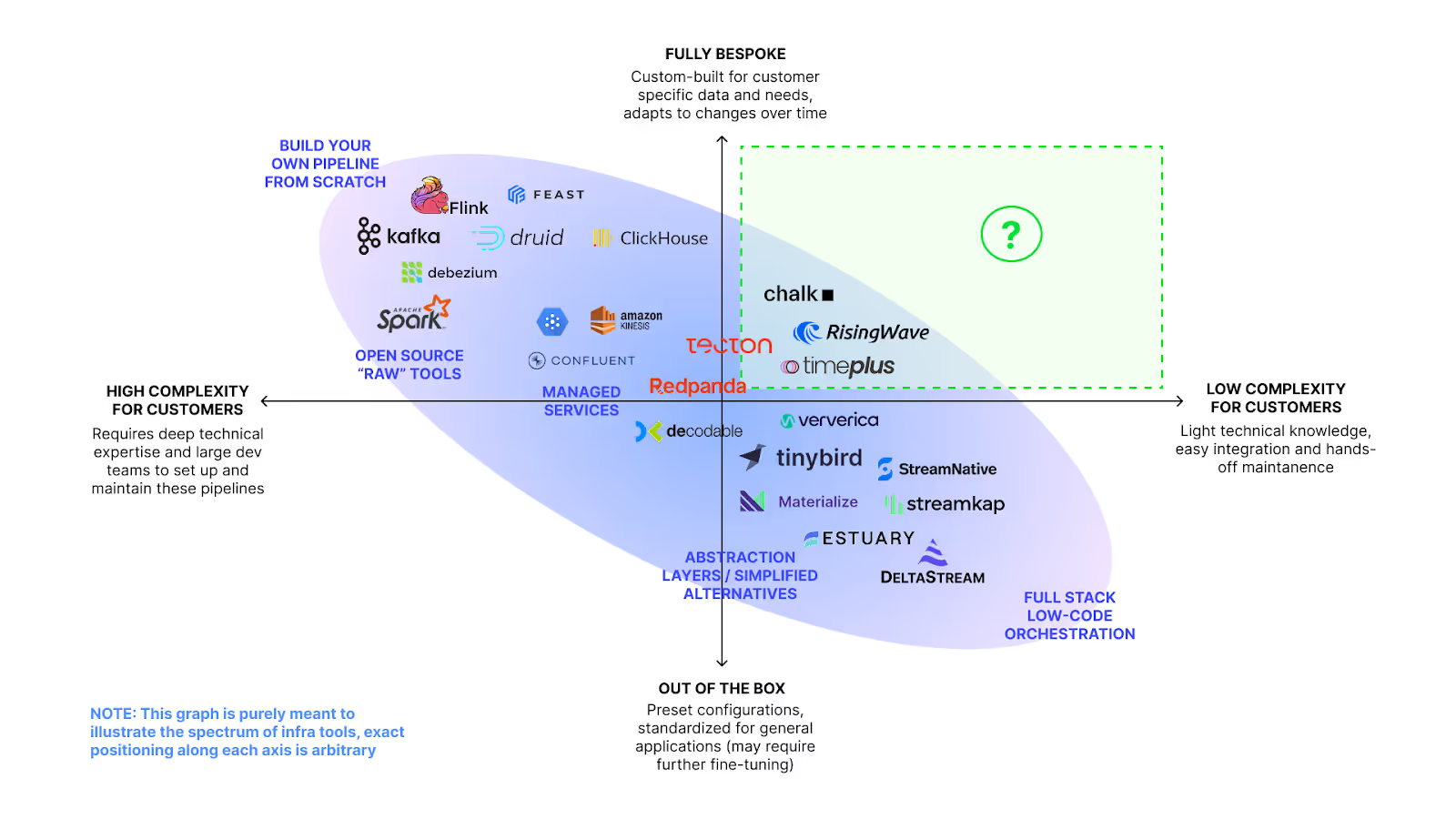

Visualizing the Landscape: Incumbents vs. New Players

Over the past decade, the real-time data infrastructure ecosystem has evolved from low-level, open-source tools to simpler, out-of-the-box solutions. At the top left of the landscape are traditional, widely adopted tools like Kafka, Flink, and Spark - powerful, but complex to implement and maintain. These systems can be deeply customized to fit exact business needs, but they require significant technical investment and can become brittle over time.

As an alternative, some vendors began offering managed services (Google Pub/Sub, Amazon MSK, Confluent Cloud) which offer hosted versions of core infrastructure components. These platforms take on much of the operational burden, i.e. handling tasks like cluster setup, scaling, upgrades, and fault tolerance, so teams don’t have to manage the raw complexity of Kafka or Flink themselves. However, these services still require users to design and manage data pipelines, configure stream processors, and write custom code for transformations.

The latest wave of platforms is both abstracting away even more complexity and vertically integrating the stack. Instead of chaining Kafka for ingestion, Flink for processing, and Pinot or Druid for analytics, products like Materialize and RisingWave offer a single, unified environment for ingestion, processing, and querying. These tools are giving smaller teams the ability to build streaming pipelines with speed while allowing larger teams to reduce operational overhead and latency. More broadly, the industry is moving from the top-left of the landscape; high-complexity, build-it-yourself tools, toward the bottom-right, where out-of-the-box platforms offer easier integration and hands-off maintenance. The tradeoff is that greater simplicity often comes at the cost of flexibility, with less room for deep customization or fine-grained performance tuning.

Here are just a few of the new players in this landscape that I’m excited about:

Ingestion / Stream Processing: Rather than exposing users to the full operational surface area of tools like Kafka (ingestion) and Flink (stream processing), these platforms focus on abstraction through familiar interfaces and managed infrastructure.

- Streamkap (Founded 2022): Ingestion platform built on Kafka that handles schema evolution, change data capture (CDC), and scaling - enabling teams to quickly sync source databases (like Postgres or MySQL) to downstream systems without dealing with Kafka directly. Its value lies in helping companies make a seamless transition from batch ETL pipelines to streaming.

- DeltaStream (Founded 2020): SQL-native stream processing engine that utilizes Flink (streaming), Spark (batch), and Clickhouse (real-time analytics) under the hood. It shifts processing upwards by letting users define complex transformations (joins, filters, aggregations) before data is sent to warehouses like Snowflake. In this scenario, ETL rather than ELT is more cost effective for the sheer volume of streaming data.

- Arroyo (Founded 2022, Acquired by Cloudflare 2025): Cloud-native stream processing engine that allows users to define streaming pipelines in SQL and deploy them anywhere with zero ops hassle. Delivers much higher performance than legacy systems (~5x faster than Apache Flink).

Databases: While legacy OLAP engines like ClickHouse (founded 2016) continue to evolve, a new generation of startups has emerged to rethink what a real-time database can look like, prioritizing developer experience, cloud-native design, and in many cases integrating across the stack.

- Timeplus (Founded 2021): Streaming-first analytics platform that combines stream processing and real-time OLAP querying in a single engine, enabling sub-second analytics on both live and historical data. It is designed to replace Flink and can be used to serve dashboards, alerts, and ML features without a separate online store.

- RisingWave (Founded 2021): Streaming-first SQL database similar to Timeplus in that it unifies stream processing and low-latency querying, but it differs by offering backend persistence into open formats like Apache Iceberg. This makes it easier to integrate with lakehouses and long-term storage, bridging real-time analytics with broader data platforms.

- Tabular (Founded 2021, Acquired by Databricks in 2024 for $1B): A cloud-native, open table storage platform for unifying batch and streaming analytics. It supports multiple compute frameworks (Spark, Flink, Trino, Snowflake) while delivering managed ingestion, performance tuning, and built-in security.

Note: We’ll get more into the implications of the “Data LakeHouse Era” for streaming and batch architectures in the next installment.

Observability: As systems become more distributed and event-driven, observability has evolved beyond traditional logging and monitoring into a real-time, context-rich feedback layer. New platforms aim not just to surface metrics, but to help teams understand system behavior, diagnose issues quickly, and even automate remediation. MeshIQ is a legacy player in the space, focusing specifically on observability for messaging and streaming pipelines (Kafka, Solace, IBM MQ), giving enterprises visibility into middleware performance and transaction flows.

- Superstream (Founded 2022): AI-powered optimization platform for Kafka that continually analyzes both clusters and client behavior to drive smarter, leaner operations. It automates tuning and remediation tasks, enabling up to 60% cost savings, reduced latency, and stable throughput with zero code changes required.

- Definity (Founded 2023): Definity brings real-time observability to data pipelines, embedding monitoring and alerting directly into Spark, lakehouse, and streaming workflows. It tracks data quality, performance, and lineage across jobs, helping teams catch bottlenecks, schema issues, or freshness gaps before they impact downstream systems.

- Conduktor (Founded 2021): Self-hosted, centralized Kafka operations platform designed for operational data and AI use cases. Conduktor combines an intuitive UI for Kafka cluster management with a Gateway proxy that enables traffic control, data quality enforcement, security policies, and governance.

AI / ML Integration: One of the earliest tools in this space was Feast, an open-source feature store introduced in 2019 that helped teams manage and serve ML features consistently across training and inference. While foundational, Feast has struggled to evolve beyond its batch-first architecture and limited support for real-time transformations. Tecton was released a year later, offering a managed feature platform designed to unify real-time and batch data pipelines. Tecton introduced important abstractions for productionizing ML features, but has also been criticized for its operational complexity and high integration overhead, especially for teams seeking lightweight or real-time-first workflows.

- Chalk (Founded 2021): Chalk is a developer-first real-time feature platform that focuses on defining, managing, and serving ML features through a familiar code-first interface. It emphasizes low-latency inference and observability, allowing teams to ship features quickly without deep infra investment. Chalk differentiates itself with a strong focus on the developer experience, particularly for teams deploying real-time models in production.

- Fennel (Founded 2022, Acquired by Databricks in 2025): Real-time feature engineering platform built in Rust with a Python interface. It enables teams to author, compute, store, serve, monitor, and govern both streaming and batch ML feature pipelines with ease. Fennel’s key advantage is its Python-native declarative pipelines (no Spark/Flink required).

Conclusion

The real-time data infrastructure landscape has undergone a remarkable transformation, from the early days of batch ETL pipelines and heavyweight stream processors, to today’s growing ecosystem of plug-and-play platforms, cloud-native databases, and ML-integrated observability tools. These innovations have significantly lowered the barrier to entry, enabling smaller teams to experiment with real-time use cases that were once the domain of tech giants.

Yet despite this progress, real-time systems still carry a high cost in complexity. Many of today’s solutions either oversimplify with rigid defaults or demand deep expertise to customize. What’s missing is a new generation of tools that strike the right balance: flexible and composable, but without the operational overhead that has historically slowed adoption.

That grey space - where ease of use meets architectural control - represents one of the most important frontiers in this space. In the next installment of this series I’ll do a deep dive on the challenges in unlocking real-time data for AI / ML at scale.

If you’re building data infrastructure to support the impending explosion of real-time data use cases and are looking to raise your Series A or Series B, reach out to elevate@antler.co.

Or if you’re just getting started, Antler runs residencies across 27 cities globally. You can apply to the relevant location here.

.webp)

.avif)

%20(1)%20(1)%20(1)%20(1)%20(1)%20(1).avif)

.avif)